Learning from demonstration is a powerful method for teaching robots new skills, and more demonstration data often leads to better policies. However, the high cost of collecting demonstrations is a significant bottleneck. Videos are often regarded as a rich source of behavioral, physical, and semantic knowledge, but extracting control-specific information from them is challenging because they lack action labels.

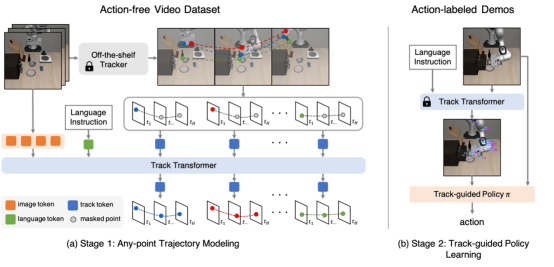

To overcome this challenge, Prof. Yang Gao’s research team from the Institute for Interdisciplinary Information Sciences (IIIS) at Tsinghua University has introduced a novel framework: Any-point Trajectory Modeling (ATM). ATM leverages video demonstrations by pre-training a trajectory model to predict the future trajectories of arbitrary points within a video frame. Once trained, the model's predicted trajectories provide detailed control guidance, enabling robust visuomotor policies to be learned from minimal action-labeled data. The approach offers a new perspective on few-shot and cross-embodiment robot learning. The work has been accepted to Robotics: Science and Systems (RSS) 2024.

Compared with generating future video frames, point trajectories naturally capture inductive biases such as object permanence, and they decouple the relevant motion of objects from lighting and texture. This representation also provides a correspondence across embodiments, such as between humans and robots. ATM first pre-trains a language-conditioned track prediction model on video data to predict the future trajectories of arbitrary points within a video frame. Guided by these learned track proposals, policies can then be trained with only a small number of action-labeled demonstrations (e.g., 10 demonstrations per task).
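To make this two-stage data flow more concrete, the following is a minimal toy sketch in Python. It is not the authors' implementation. In ATM the track predictor is a learned, language-conditioned model trained on videos, and the policy is trained on action-labeled demonstrations; here both are replaced by hypothetical stand-ins. `predict_tracks` uses simple constant-velocity extrapolation (and ignores any language instruction), and `toy_policy` just averages the predicted point displacements. All function names and parameters are illustrative assumptions.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Track = List[Point]  # future (x, y) positions of one query point


def sample_query_points(width: int, height: int, grid: int) -> List[Point]:
    """Sample a uniform grid of query points over a frame (hypothetical choice)."""
    step_x = width / (grid + 1)
    step_y = height / (grid + 1)
    return [(step_x * (i + 1), step_y * (j + 1))
            for j in range(grid) for i in range(grid)]


def predict_tracks(points: List[Point], velocity: Point, horizon: int) -> List[Track]:
    """Placeholder track predictor: constant-velocity extrapolation.

    In ATM this role is played by a learned, language-conditioned model;
    this stand-in only mimics the input/output structure (points -> tracks).
    """
    vx, vy = velocity
    return [[(x + vx * t, y + vy * t) for t in range(1, horizon + 1)]
            for (x, y) in points]


def track_displacements(points: List[Point], tracks: List[Track]) -> List[Point]:
    """Summarize each track as its final displacement from the query point."""
    return [(track[-1][0] - x, track[-1][1] - y)
            for (x, y), track in zip(points, tracks)]


def toy_policy(displacements: List[Point]) -> Point:
    """Hypothetical policy: move in the average predicted direction of motion."""
    n = len(displacements)
    return (sum(d[0] for d in displacements) / n,
            sum(d[1] for d in displacements) / n)


if __name__ == "__main__":
    points = sample_query_points(100, 100, grid=4)          # 16 query points
    tracks = predict_tracks(points, velocity=(1.0, -0.5), horizon=8)
    action = toy_policy(track_displacements(points, tracks))
    print(action)  # (8.0, -4.0)
```

The point of the separation is that the track predictor needs only videos (no action labels), so the scarce action-labeled demonstrations are spent solely on the comparatively small mapping from predicted tracks to actions.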

ATM enables few-shot and cross-embodiment imitation learning from videos. It was evaluated on more than 130 language-conditioned tasks in both simulated and real-world environments, where it outperformed strong video pre-training baselines by an average of 80%. The study also demonstrates successful transfer of manipulation skills learned from human videos, pointing to its potential for practical applications.

The title of the paper is Any-point Trajectory Modeling for Policy Learning. The co-first authors are IIIS doctoral student Chuan Wen, UC Berkeley postdoctoral fellow Xingyu Lin, and Stanford master's student John So. Other authors include Assistant Professor Qi Dou and Dr. Kai Chen from the Chinese University of Hong Kong. The advisors are UC Berkeley Professor Pieter Abbeel (corresponding author) and IIIS Assistant Professor Yang Gao.

Paper Link: https://arxiv.org/pdf/2401.00025

Project Webpage: https://xingyu-lin.github.io/atm/

Editor: Yueliang Jiang

Reviewer: Xiamin Lv